If you saw the disclosure notice for CVE-2022-23529, it would have been presented as a remote code execution flaw (via JWT secret poisoning) in the jwt.verify method of a widely used Node.js package for working with JSON Web Tokens (JWTs). The package in question is Auth0’s node_jsonwebtoken library, released as the jsonwebtoken package on NPM. You may also have seen a follow-up saying this flaw has been rejected. The NIST National Vulnerability Database lists it as rejected, with the reason "This issue is not a vulnerability."

I would like to dig into what I think went wrong with this one because it highlights some larger problems in the infosec industry. This is a case study of one current example, but the problems it highlights are certainly not unique to this CVE.

Why isn’t it a vulnerability?

As soon as it came up in internal discussion here at Secure Ideas, I read the write-up from Unit 42 (a threat research organization under Palo Alto Networks’ umbrella) and saw some red flags in the proof-of-concept. I felt I must be missing something for it to be the issue it was presented as, so I dug into the project’s GitHub repo and compared the patched version to the previous one. I examined the changes to verify.js, which contains the function that was supposed to be vulnerable, as well as the new test/jwt.malicious.tests.js added to the project to cover abuse cases, including the one demonstrated in CVE-2022-23529. From there, I recreated the proof-of-concept demonstrating exploitation. Finally, I upgraded the jsonwebtoken package and verified how the patch fixed the issue. This led me to conclude two things:

1. That the issue would almost never be exploitable, and

2. That if it were exploitable, a remote code execution flaw would already be present, independent of jsonwebtoken.

To understand how I got there, it would be helpful to review Unit 42’s write-up on the flaw.

The code below is my reimplementation of their PoC, but it’s taken nearly verbatim from their write-up.

    const jwt = require("jsonwebtoken");

    const token = jwt.sign({"x":"y"}, "some_secret");
    const mal_obj = {
      toString: () => {
        console.log("PWNED!!!");
        require("fs").writeFileSync("malicious.txt", "PWNED!!!! Arbitrary File Write on the host machine");
      }
    };
    try {
      jwt.verify(token, mal_obj);
      // ...

The parts of this that are relevant here start with the mal_obj (malicious object) variable, created as a JavaScript object with a toString() method. It is then passed into the jwt.verify function as the second argument (the secretOrPublicKey parameter of the verify method), where its toString method is called, executing the attacker’s code.
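The mechanism can be reproduced without the library at all. The sketch below is a hypothetical, simplified stand-in (`verifyLike` and `sideEffects` are illustrative names, not the jsonwebtoken source) that only assumes what the PoC relies on: the key argument gets converted with toString(). A plain string secret runs nothing extra, while an object shaped like mal_obj runs its own code.

```javascript
// Hypothetical, simplified stand-in for a verify step that inspects its key.
// This is NOT the jsonwebtoken source; it only mimics the behavior the PoC
// relies on: the secretOrPublicKey argument is converted with toString().
function verifyLike(token, secretOrPublicKey) {
  // e.g. sniffing for a PEM header to decide which algorithms to allow
  const looksLikePem = secretOrPublicKey.toString().includes("BEGIN PUBLIC KEY");
  return { token, looksLikePem };
}

// Records side effects instead of logging or writing files.
const sideEffects = [];

// A plain string secret: String.prototype.toString runs, nothing else does.
const fromString = verifyLike("header.payload.signature", "some_secret");

// An object shaped like mal_obj: its own toString() runs inside verifyLike.
const mal_obj = {
  toString: () => {
    sideEffects.push("PWNED!!!"); // stands in for console.log / writeFileSync
    return ""; // the PoC returns nothing; a string keeps .includes() from throwing
  },
};
const fromObject = verifyLike("header.payload.signature", mal_obj);

console.log(fromString.looksLikePem, fromObject.looksLikePem); // false false
console.log(sideEffects.join(", ")); // PWNED!!!
```

Note that the string case is the normal one: the attacker only gets code execution if they can already hand the function a live object with a callable toString.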

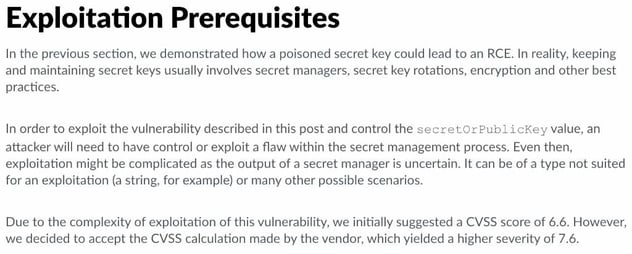

This PoC itself is flawed in that it assumes the ability to create arbitrary JavaScript objects within the context of the server application (or web service). Due to public pushback, Unit 42 updated its post with an Exploitation Prerequisites statement in an attempt to address this.

The key pieces here are:

> An attacker will need to have control or exploit a flaw within the secret management process. Even then, exploitation might be complicated…

And

> Due to the complexity of exploitation of this vulnerability, we initially suggested a CVSS score of 6.6

To be clear on what this means: no commonly used data storage or transmission format today will result in the creation of an arbitrary JavaScript object in the context of the server application, unless the server application creates one via a flaw of its own. Strings are specifically identified as a case where it’s not exploitable. JSON won’t get you there either: JSON has no syntax for functions, so input that tries to embed one makes the standard JSON.parse function throw a SyntaxError rather than produce an object containing a function. Type-enforced implementations like gRPC, tRPC, or GraphQL won’t let you define arbitrarily shaped objects either, because input is validated against a type definition.

However, if we suppose that something in the secret manager or key rotation process allows the creation of an arbitrary JavaScript object, the RCE can be trivially achieved before the code ever reaches the jwt.verify method. Considering the PoC above, I could make the following small change to the mal_obj declaration:

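    const jwt = require("jsonwebtoken");

    const token = jwt.sign({"x":"y"}, "some_secret");
    const mal_obj = {
      // The added parentheses invoke the arrow function immediately (an IIFE),
      // so the payload runs while the object literal is being evaluated.
      toString: (() => {
        console.log("PWNED!!!");
        require("fs").writeFileSync("malicious.txt", "PWNED!!!! Arbitrary File Write on the host machine");
      })()
    };
    try {
      jwt.verify(token, mal_obj);
      // ...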

With that four-character change (wrapping the arrow function in parentheses and invoking it), the function executes as soon as the object is created, before jwt.verify is ever called.
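The timing difference can be checked without jsonwebtoken. In this minimal sketch, `events`, `lazy`, and `eager` are hypothetical names, and an array push stands in for the console and file writes. The lazy payload never runs unless something calls it; the eager one runs during object creation, so whatever is passed to a verify function afterward is irrelevant.

```javascript
// Sketch of the timing difference, with no jsonwebtoken dependency.
// `events` is a hypothetical log standing in for the PoC's side effects.
const events = [];

// Original PoC shape: the arrow function is stored as toString and only
// runs if something later calls it.
const lazy = {
  toString: () => {
    events.push("lazy payload ran");
  },
};
events.push("lazy object created");

// Modified shape: the added parentheses invoke the arrow function at once,
// so the payload runs while the object literal is being evaluated.
const eager = {
  toString: (() => {
    events.push("eager payload ran");
  })(),
};
events.push("eager object created");

console.log(events.join(" -> "));
// lazy object created -> eager payload ran -> eager object created
console.log(typeof lazy.toString, typeof eager.toString); // function undefined
```

The eager object’s toString ends up as undefined (the IIFE’s return value), which doesn’t matter to an attacker: the code has already run.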

Because the behavior of this verify function doesn’t increase the attacker’s abilities beyond what they would need to exploit the issue in the first place, this isn’t really a flaw. It’s much like how an administrator being able to create other administrators is generally not a flaw: the prerequisite access is the same access the action grants.
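The prerequisite is the part that doesn’t hold up for typical inputs. As a minimal sketch (the `tryParse` helper is hypothetical), here is what happens when JSON text tries to carry a toString: a function literal is rejected outright, and a string-valued toString key only yields an object that throws a TypeError on conversion instead of running anything.

```javascript
// Sketch: JSON text can carry data, but not executable functions.
// tryParse is a hypothetical helper that reports the outcome of JSON.parse.
function tryParse(text) {
  try {
    return { ok: true, value: JSON.parse(text) };
  } catch (err) {
    return { ok: false, error: err.name };
  }
}

// 1. A function literal is not valid JSON, so JSON.parse rejects the input.
const withFunction = tryParse('{"toString": () => { console.log("PWNED!!!"); }}');
console.log(withFunction.ok, withFunction.error); // false SyntaxError

// 2. A "toString" key is allowed, but its value can only be data (a string here).
const withString = tryParse('{"toString": "PWNED!!!"}');
console.log(typeof withString.value.toString); // string

// 3. Converting that object to a string throws a TypeError; no code runs.
let conversion;
try {
  conversion = `${withString.value}`;
} catch (err) {
  conversion = err.name;
}
console.log(conversion); // TypeError
```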

One more closing consideration: many standard operations in JavaScript automatically call an object’s toString method as part of the runtime’s implicit type conversion. Consider the following example:

    const message = `Hello ${username}`;

If username contained an object shaped like mal_obj from the PoC, its toString() would execute there as well. The username in this example could come from another system (e.g. LDAP or an SSO provider). This has nearly identical risk characteristics to the reported jsonwebtoken flaw, but again: if the application’s retrieval of the data can lead to the creation of an arbitrary JavaScript object, that’s a flaw in the application’s retrieval of the data. Its use in the template literal doesn’t really affect the risk, since the creation of the arbitrary object allows for RCE on its own.
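To make the implicit-conversion point concrete, here is a minimal sketch assuming a hypothetical `username` object shaped like mal_obj, with a counter standing in for the payload. Each of these common operations invokes its toString() once, so if an attacker could plant such an object, any one of them would be enough.

```javascript
// Sketch: several everyday operations implicitly call an object's toString().
// `username` is a hypothetical object shaped like mal_obj; `calls` counts
// how many times its toString() runs.
let calls = 0;
const username = {
  toString: () => {
    calls += 1; // stands in for the PoC's console.log / file write
    return "alice";
  },
};

const viaTemplate = `Hello ${username}`; // template literal
const viaString = String(username); // explicit String() conversion
const viaConcat = "Hello " + username; // + with a string operand
const viaJoin = [username].join(","); // Array.prototype.join

console.log(viaTemplate, viaString, viaConcat, viaJoin, calls);
// Hello alice alice Hello alice alice 4
```

For the `+` case, the runtime tries valueOf first; the default Object.prototype.valueOf returns the object itself, so it falls back to toString.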

What went wrong here?

In my opinion, the proof-of-concept was flawed in the first place, but that can be chalked up to a simple error. Researchers make mistakes from time to time. I certainly make mistakes too; everybody does. When businesses replace us with AI vulnerability analysis bots, those will make mistakes as well. I want to reiterate that the researcher misjudging the vulnerability isn’t really the problem. Ideally, Unit 42’s internal review process would have caught the issues with the PoC, but I can understand how it might not have. From the Exploitation Prerequisites statement, it sounds like Auth0 raised the CVSS score, which I believe has proven to be the wrong move. To their credit, they released a patch quickly and wrote regression tests, but it seems to me they could have examined the reported flaw more critically.

One of the core issues was an apparent urgency among tech journalists and industry folks to be seen reporting on it, which continued even after the issue’s validity was called into question. For example, this pull request on the GitHub advisory database is three weeks old (as of January 30, 2023), and Michael Ermer saw the same problems I did. The community response clearly shows plenty of support for that assessment. Yet a lot of material that amplified the initial claim, without critically assessing the information or providing timely updates, is still circulating.

Specifically:

1. News platforms reported it as quickly as they could, then failed to update their stories as community scrutiny mounted. This example was posted on January 10th and is still being shared actively (e.g. I’ve seen it reposted on Twitter within the past few days), but it has no update noting that the flaw’s validity has been questioned. https://thehackernews.com/2023/01/critical-security-flaw-found-in.html

2. I’ve seen a number of blogs by security professionals that, in an effort to be early and relevant, essentially regurgitated Unit 42’s original post and proof-of-concept without adding their own analysis or questioning what appeared to me to be an obvious issue.

That second issue is particularly troubling to me because it undermines trust in security professionals when we amplify something as a critical issue and divert resources to rush a fix, when basic due diligence would have shown it was unnecessary. We don’t want caution to stop us from spreading the word when there’s a real issue out there, but plenty of folks have the right domain knowledge to assess something like this very quickly. We need to avoid rushing to amplify something we don’t understand. As an industry, we need to do better.

Wrapping it up

Particularly when overly amplified, reported vulnerabilities can lead companies to reallocate resources and shift priorities. Sometimes dropping everything to address the issue is the right choice. Other times, as in this example, the real-world exploitability and impact are much lower than reported. In many cases, your in-house security team may have the knowledge to make pragmatic decisions about how a vulnerability affects your organization. I’ve been fortunate to work with our Strategic Advisory service (SASTA) clients to help them sift through the noise and better understand flaws like this one. My teammates at Secure Ideas would be happy to help; please reach out if we can assist you.
