Custom Tooling at Lightning Speed

Most cybersecurity professionals hear "AI in penetration testing" and immediately think of automated vulnerability scanners or AI-powered report generation. They're missing the real revolution happening right now in security testing, and it's not what you think.

The game-changing impact of AI isn't in automating existing tools or interpreting scan results. It's in democratizing custom exploit development and dramatically accelerating the creation of specialized attack tools. This shift fundamentally undermines one of the oldest assumptions in cybersecurity: that complexity and obscurity provide meaningful protection against attackers.

When "Security Through Obscurity" Meets AI Reality

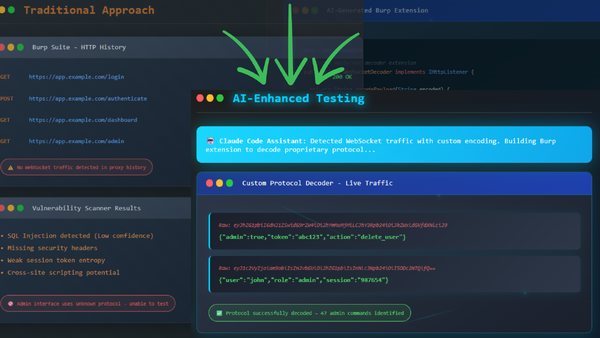

During a recent web application penetration test, I encountered exactly the scenario that illustrates this new reality. The client's application appeared standard enough through my Burp Suite proxy, with typical HTTP requests and responses for the main functionality. But the administrative interface was different. No HTTP traffic was being logged for admin actions, which immediately raised questions about how that part of the application actually functioned.

Digging deeper, I discovered the admin interface relied entirely on WebSocket communication using a custom JSON-based protocol with encoded payloads. The data flowing through these connections was completely opaque, just encoded strings that meant nothing without understanding the underlying protocol structure.

In the traditional penetration testing world, this is where many assessments would hit a wall. Custom protocols, proprietary encoding schemes, and unique data formats represent the kind of technical barriers that have historically protected applications from all but the most determined attackers. The assumption was simple: if an attacker couldn't quickly understand and manipulate your custom implementation, they'd move on to easier targets.

That assumption just became dangerously outdated.

Four Hours vs. Four Days: The AI Acceleration Factor

Here's where the story gets interesting. After some research, my team discovered that this client had actually published documentation for their WebSocket protocol. Armed with this reference material, I opened Claude Code and set it to work building a custom Burp Suite extension to decode these payloads.

The AI didn't just help; it fundamentally changed the entire timeline and feasibility of the task.

Within the first hour, I had a basic Burp extension framework in Java with stubbed-out decoding functions. Then I had the AI create a separate Python script to rapidly iterate on understanding the protocol structure, using the published documentation as reference. What's particularly noteworthy is that even before we had the official protocol documentation, the AI was already making progress on decoding the custom format. Pattern recognition and protocol analysis are natural strengths for large language models.
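To give a sense of what that kind of rapid iteration can look like, here is a minimal sketch of a standalone probing script. It is not the script from the engagement: the function names (`candidate_decodings`, `looks_like_json`) and the choice of base64 and hex as candidate encodings are assumptions made purely for illustration, since the client's actual encoding is not described here.

```python
import base64
import binascii
import json


def candidate_decodings(payload: str) -> dict[str, bytes]:
    """Try a few common encodings and keep the ones that decode without error.

    The candidate list is an assumption for illustration; a real protocol
    may use something else entirely.
    """
    decoders = {
        "base64": lambda s: base64.b64decode(s, validate=True),
        "hex": bytes.fromhex,
    }
    results: dict[str, bytes] = {}
    for name, decode in decoders.items():
        try:
            results[name] = decode(payload)
        except (binascii.Error, ValueError):
            # This encoding does not fit the payload; try the next one.
            continue
    return results


def looks_like_json(data: bytes) -> bool:
    """Return True if the bytes are UTF-8 text that parses as JSON."""
    try:
        json.loads(data.decode("utf-8"))
        return True
    except (UnicodeDecodeError, json.JSONDecodeError):
        return False


if __name__ == "__main__":
    # Hypothetical captured payload (base64 of a small JSON document).
    captured = base64.b64encode(b'{"action": "list_users"}').decode("ascii")
    for name, raw in candidate_decodings(captured).items():
        print(name, looks_like_json(raw), raw[:40])
```

Running a script like this against a handful of captured frames is a quick way to narrow down which hypothesis about the format is worth pursuing before any extension code gets written.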

Once the standalone decoding was working perfectly in Python, migrating that logic into the Java-based Burp extension was straightforward. We even discovered a second layer of encoding within the decoded payloads, which the AI handled without breaking stride.
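The layered decoding described above can be sketched roughly as follows. Everything specific here is an assumption for illustration: the envelope field names (`type`, `data`, `args`), the use of base64-wrapped JSON at each layer, and the helper names `unwrap`, `decode_field`, and `decode_frame`. The real protocol's encoding is not documented in this post.

```python
import base64
import binascii
import json
from typing import Any


def decode_field(value: str) -> Any:
    """If value is base64-encoded JSON (object or array), return it decoded.

    Anything else is returned unchanged, so ordinary strings pass through.
    """
    try:
        raw = base64.b64decode(value, validate=True)
        parsed = json.loads(raw.decode("utf-8"))
    except (binascii.Error, ValueError):
        return value
    if not isinstance(parsed, (dict, list)):
        # Avoid false positives such as short strings that happen to decode.
        return value
    # The decoded structure may itself contain another encoded layer.
    return unwrap(parsed)


def unwrap(node: Any) -> Any:
    """Walk a parsed JSON structure and decode any nested encoded strings."""
    if isinstance(node, dict):
        return {key: unwrap(val) for key, val in node.items()}
    if isinstance(node, list):
        return [unwrap(item) for item in node]
    if isinstance(node, str):
        return decode_field(node)
    return node


def decode_frame(frame_text: str) -> Any:
    """Decode one WebSocket text frame carrying a JSON envelope."""
    return unwrap(json.loads(frame_text))


if __name__ == "__main__":
    # Build a hypothetical two-layer frame to exercise the decoder.
    inner = base64.b64encode(json.dumps({"user": "admin"}).encode()).decode()
    middle = json.dumps({"cmd": "update", "args": inner}).encode()
    frame = json.dumps({"type": "admin", "data": base64.b64encode(middle).decode()})
    print(json.dumps(decode_frame(frame), indent=2))
```

Because the walk is recursive, a second (or third) layer of encoding needs no special handling, which matches how little extra effort the second layer took in practice. Once logic like this works standalone, porting it into a Java-based Burp extension is mostly a translation exercise.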

The entire process, from identifying the custom protocol to having a fully functional decoding extension, took approximately four to five hours. Previously, this same task would have required several days of development time, assuming I even attempted it within the time constraints of a typical penetration test.

And here's the crucial detail: the end result wasn't production-ready code. It wasn't pretty, didn't have error handling for edge cases, and I didn't even complete the re-encoding functionality. However, it worked perfectly for the specific use case of decoding every payload to make the previously opaque traffic completely readable.

For penetration testing purposes, "good enough to get the job done" is exactly what matters.

The Democratization of Custom Exploit Development

This experience highlights a fundamental shift in the cybersecurity landscape. AI assistance has lowered the technical barrier for custom tool development in two critical ways:

For Less Experienced Developers: Many penetration testers simply don't have extensive coding backgrounds. They might be excellent at finding vulnerabilities and understanding attack vectors, but building custom tools was often beyond their skill set. AI now enables these professionals to create sophisticated scripts and extensions they never would have attempted before.

For Experienced Developers: Those of us who can code still faced hard trade-offs under the time constraints of an assessment. Building a custom tool might take days, and in a time-boxed penetration test, that effort often wasn't justifiable. With AI acceleration, previously impractical custom development becomes not just feasible, but efficient.

The broader implication is sobering: any vulnerability that can be exploited through software can now have that exploitative software built quickly by AI, regardless of how obscure or complex the target system might be.

Rethinking "High Bar" Vulnerability Assessments

Consider the typical risk assessment process when organizations discover new vulnerabilities. CVEs get published with "no known exploits in the wild," and security teams often downgrade the urgency based on that absence of readily available exploit code. The logic has been reasonable: if sophisticated attackers haven't bothered to build exploitation tools, maybe the vulnerability isn't as critical as initially thought.

That reasoning no longer holds, and relying on it is becoming increasingly dangerous.

AI assistance means that "no known exploits in the wild" no longer indicates that exploit development is technically challenging or time-intensive. It now simply means no one has chosen to target that specific vulnerability yet. The technical barriers that once protected obscure protocols, proprietary encodings, and complex custom implementations have largely evaporated.

Organizations that have relied on the complexity of their systems as a defensive layer need to fundamentally reassess that strategy. If your security posture depends on attackers finding your implementation too difficult to understand or too time-consuming to exploit, that protection is eroding rapidly.

The Enterprise Defense Response

Smart security leaders are already adapting to this new reality, but many organizations haven't yet grasped the implications for their defensive strategies. Here's what needs to change:

Risk Registry Reevaluation: Go back through your accepted risks and specifically examine any decisions based on "high technical bar" reasoning. Vulnerabilities that seemed impractical to exploit due to custom protocols or complex implementations need fresh risk assessments that account for AI-accelerated development capabilities.

Penetration Testing Evolution: Ensure your security testing keeps pace with attacker capabilities. This means working with penetration testers who are comfortable leveraging AI tools themselves. If your assessments aren't using the same force multipliers that real attackers now have access to, you're not getting an accurate picture of your actual security posture.

Proactive Technical Debt Management: Those proprietary protocols and custom implementations that seemed to provide security through complexity? They're now just technical debt that's creating blind spots in your security program. Consider standardizing on well-tested, widely supported protocols and frameworks where possible.

The key realization is that AI doesn't just make existing attacks faster; it makes previously impractical attacks feasible. Custom exploit development is no longer the exclusive domain of advanced persistent threat groups with significant resources. It's now accessible to any attacker with the motivation to target your specific environment.

The Human Expertise Multiplier

It's crucial to understand that AI isn't replacing human expertise in penetration testing; it's amplifying it dramatically. The most effective use of AI in security testing still requires domain knowledge, testing experience, and the ability to recognize what tools need to be built in the first place.

In my WebSocket decoding example, the AI didn't identify the hidden communication channel or recognize that custom tooling was needed. Those insights came from years of penetration testing experience. The AI also didn't determine what information would be valuable to extract from the decoded payloads or how that data could be used to identify actual vulnerabilities.

What AI provided was the ability to rapidly bridge the gap between recognizing a testing opportunity and having the tools necessary to properly evaluate it. This human-AI collaboration model is where the real power lies, and it's exactly the capability that sophisticated attackers are already leveraging.

The Professionally Evil Advantage

At Secure Ideas, we've been building custom tools for complex penetration testing scenarios since long before AI acceleration became available. I've personally developed Burp Suite extensions like CO2 and Paramalyzer that are used by security professionals worldwide. This background puts our team in a unique position to leverage AI effectively. We understand both what needs to be built and how to build it efficiently.

The combination of deep technical expertise and AI-powered development acceleration means we can tackle testing scenarios that would be impractical or impossible for teams relying solely on automated scanning tools or generic vulnerability assessments. When your organization needs penetration testing that matches the sophistication of modern attackers, you need partners who are comfortable operating at that level.

Most importantly, we understand that the goal isn't to create production-quality software during a penetration test. The goal is to create tools that work well enough to identify and exploit vulnerabilities in your specific environment. AI excels at exactly this type of "good enough for the use case" development, particularly when guided by experienced security professionals who know what questions to ask.

Looking Forward: Adaptation vs. Obsolescence

The cybersecurity industry is at an inflection point. AI-assisted tool development isn't coming; it's already here and being used by both legitimate security professionals and malicious actors. Organizations that adapt their defensive strategies to account for this new reality will maintain strong security postures. Those that continue operating under outdated assumptions about technical barriers and exploit development timelines will find themselves increasingly vulnerable.

The solution isn't to fear AI or try to prevent its use in security testing. The solution is to ensure your security program leverages the same capabilities that attackers now have access to. This means partnering with security professionals who understand both the technical and strategic implications of AI-accelerated threat development.

Custom exploit development used to be a significant differentiator between advanced attackers and everyone else. That differentiation is rapidly disappearing. The question for your organization is simple: are your security assessments keeping pace with this evolution, or are they still operating under the old assumptions about what attackers can and will build?

The answer to that question might determine whether your next vulnerability disclosure becomes a minor remediation task or a major security incident.

Staying ahead of the threat landscape isn't just an advantage; it's essential.

Ready to ensure your penetration testing matches the sophistication of modern AI-assisted attackers? Contact Secure Ideas to discuss how our experienced team of experts leverages cutting-edge tools and techniques to provide the most comprehensive security assessments possible.